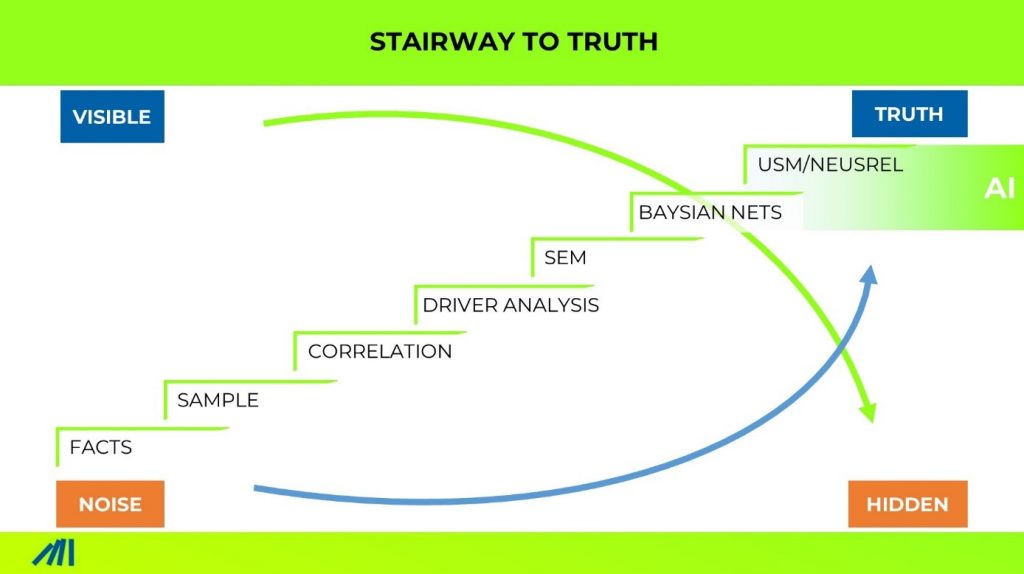

1. Facts

If you see in the news that a plane has crashed, you learn one thing: it’s dangerous to fly. 42% of people are anxious about flying, while 2% have a clinical disorder.

The crash is a fact, but it is not the truth. Flying is safer than driving a car by a factor of 100,000.

When US bombers came back from their missions in World War II, the military analyzed where the returning planes had been hit and added armor to those areas.

They acted on facts, but the initiative was useless because the analysis did not uncover the truth.

It’s impossible to understand why bombers do not come back without analyzing those who don’t come back.

In the same way, it’s impossible to understand why customers churn if you only analyze churners. It could be that churners and loyal customers complain about the same thing.
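The churn point can be sketched in a few lines of Python. The data below is invented purely for illustration, and the names `Customer`, `complained_about_price`, and `had_outage` are hypothetical. The sketch compares how often each issue shows up among churners and among loyal customers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    churned: bool
    complained_about_price: bool
    had_outage: bool


def rate(customers, attribute):
    """Share of customers for whom the boolean attribute is True."""
    return sum(getattr(c, attribute) for c in customers) / len(customers)


# Invented toy data: 50 churners and 200 loyal customers.
customers = (
    [Customer(True, True, True)] * 30
    + [Customer(True, True, False)] * 10
    + [Customer(True, False, False)] * 10
    + [Customer(False, True, False)] * 160
    + [Customer(False, True, True)] * 10
    + [Customer(False, False, False)] * 30
)

churners = [c for c in customers if c.churned]
loyal = [c for c in customers if not c.churned]

# Print each issue's share in both groups, side by side.
for attribute in ("complained_about_price", "had_outage"):
    print(
        f"{attribute}: churners {rate(churners, attribute):.0%}, "
        f"loyal {rate(loyal, attribute):.0%}"
    )
```

Looking at churners alone, price is the top complaint (80% of them, by construction). Only the comparison shows that loyal customers complain about price just as often (85%), while outages are what separate the two groups (60% versus 5%).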

2. Sample

What it takes instead is a representative sample of facts. A single fact is just a snapshot of the truth, like one pixel out of a picture. It might be true. But on its own, it is meaningless.

In 1936, one of the most extensive polls ever conducted made it to the news. 2.4 million Americans had responded to a mail-in survey whose address list was drawn largely from telephone directories and car registrations. The prediction was overwhelming: Roosevelt would be the clear loser, with only about 43% of the votes.

In the end, Roosevelt won with more than 60%. How could polling fail so miserably?

The sample was not representative. At that time, telephone owners were wealthier than the average voter, and wealth correlated with the likelihood of voting Republican, that is, against Roosevelt.

Even a small sample of pixels can paint a picture. But if the pixels are drawn from just one side of the picture, you are likely to read a different “truth”.
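A small simulation makes the pixel argument concrete. All numbers here are assumptions chosen for illustration, not historical data: a wealthy quarter of the population leans against the candidate and is far more likely to own a telephone.

```python
import random

rng = random.Random(1936)  # fixed seed so the run is repeatable

# Invented population: each voter is (owns_phone, votes_for_candidate).
population = []
for _ in range(100_000):
    wealthy = rng.random() < 0.25
    owns_phone = rng.random() < (0.80 if wealthy else 0.10)
    votes_for_candidate = rng.random() < (0.35 if wealthy else 0.70)
    population.append((owns_phone, votes_for_candidate))


def support(voters):
    """Share of voters in the list who back the candidate."""
    return sum(vote for _, vote in voters) / len(voters)


true_support = support(population)

# Huge but biased sample: everyone who owns a phone.
phone_sample = [voter for voter in population if voter[0]]

# Small but representative sample: 2,000 voters drawn at random.
random_sample = rng.sample(population, 2_000)

print(f"whole population: {true_support:.1%}")
print(f"phone owners (n={len(phone_sample)}): {support(phone_sample):.1%}")
print(f"random sample (n={len(random_sample)}): {support(random_sample):.1%}")
```

With these assumed rates, true support is roughly 61%, yet the phone-owner sample, although more than ten times larger, lands near 45% and predicts a loss. The small random sample stays close to the true value. Size does not repair a one-sided sample.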

3. Correlation

The journey to truth does not end at a well-sampled “picture”. Why? Ask yourself, what do business leaders really want to learn?

What’s more interesting: “What is your precise market share?” or “How can you increase market share?”

The first question asks for an aggregated picture from facts.

The second asks for an invisible insight that must be inferred from facts. It is the question of what causes outcomes.

“Age correlates with buying lottery tickets” – From this correlation, many lottery businesses still conclude today that older people are more receptive to playing the lottery.

The intuitive method of learning about causes is correlation. It is what humans do day in, day out. It works well in environments where the effect follows shortly after the cause and where, at the same time, only one cause is changing.

It often works well in engineering, craftsmanship, and administration.

It works miserably for anything complex. Marketing is complex, sales is complex, HR is complex.

“Complex” means that many things influence results. Even worse, the effects often show up only after long time lags.

Back to the lottery. The truth is that younger people are more likely to start buying lottery tickets. Why, then, do older people play more often? Purchasing a lottery ticket is a habit. Habits form over time (that is, with age). The habit is amplified by the experience of winning, which again is a function of time.

4. Modeling

To fight spurious correlation, science developed multivariate modeling. The simplest form is a multivariate regression.

The idea: if many things influence an outcome at the same time, you need to look at all possible drivers at the same time to single out the individual contribution of each.
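Here is a minimal sketch of that idea, applied to the lottery example from the previous section. The data is simulated under an invented ground truth (playing increases with `habit_years` and slightly decreases with `age`), and the helper names are hypothetical. The least-squares formulas are written out with the standard library only, to keep the sketch self-contained.

```python
import random
from statistics import fmean


def centered(values):
    """Subtract the mean from every value."""
    m = fmean(values)
    return [v - m for v in values]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def simple_slope(x, y):
    """Slope of a one-driver least-squares regression of y on x."""
    cx, cy = centered(x), centered(y)
    return dot(cx, cy) / dot(cx, cx)


def two_driver_slopes(x1, x2, y):
    """Slopes of a least-squares regression of y on x1 and x2 together."""
    c1, c2, cy = centered(x1), centered(x2), centered(y)
    s11, s22, s12 = dot(c1, c1), dot(c2, c2), dot(c1, c2)
    s1y, s2y = dot(c1, cy), dot(c2, cy)
    det = s11 * s22 - s12 * s12
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


rng = random.Random(7)
age, habit_years, tickets = [], [], []
for _ in range(5_000):
    a = rng.uniform(18, 80)
    h = rng.uniform(0, a - 18)  # years since the habit started
    # Invented ground truth: habit drives playing, age slightly reduces it.
    t = 20 + 1.0 * h - 0.1 * a + rng.gauss(0, 2)
    age.append(a)
    habit_years.append(h)
    tickets.append(t)

age_only = simple_slope(age, tickets)
age_effect, habit_effect = two_driver_slopes(age, habit_years, tickets)

print(f"age alone:        {age_only:+.2f} tickets per year of age")
print(f"age, given habit: {age_effect:+.2f}")
print(f"habit, given age: {habit_effect:+.2f}")
```

Looked at alone, age appears to increase ticket purchases, because older people have had more time to build the habit. Once both drivers are in the model together, the age coefficient turns negative and the habit carries the effect, which matches the simulated ground truth.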

The limitation of conventional multivariate statistical methods is that they rely on rigid assumptions, such as:

- No Interactions: All drivers are independent of each other

- Linearity: The more, the better

- All drivers have the same distribution

Sure, many refinements have been developed, but you always needed to know the specifics upfront. You need to know what kind of nonlinearity is present, and what is interacting with what, and how.

No surprise that this turned out to be highly impractical. Businesses face challenges and need to solve them within weeks, not years.
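The “no interactions” assumption can be illustrated with a deliberately extreme, invented case: an outcome that depends only on the product of two drivers. The names `driver_a`, `driver_b`, and `outcome` are placeholders, and the sketch uses a plain one-driver least-squares slope.

```python
import random
from statistics import fmean


def simple_slope(x, y):
    """Slope of a one-driver least-squares regression of y on x."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


rng = random.Random(11)
driver_a = [rng.uniform(-1, 1) for _ in range(5_000)]
driver_b = [rng.uniform(-1, 1) for _ in range(5_000)]

# Invented ground truth: the outcome is a pure interaction of both drivers.
outcome = [a * b for a, b in zip(driver_a, driver_b)]

slope_a = simple_slope(driver_a, outcome)
slope_b = simple_slope(driver_b, outcome)

# The effect only becomes visible if the interaction term is supplied upfront.
interaction = [a * b for a, b in zip(driver_a, driver_b)]
slope_interaction = simple_slope(interaction, outcome)

print(f"slope of driver_a:    {slope_a:+.3f}")
print(f"slope of driver_b:    {slope_b:+.3f}")
print(f"slope of interaction: {slope_interaction:+.3f}")
```

A linear model reports a slope close to zero for both drivers, even though together they determine the outcome completely. The model only finds the effect when someone already knows to feed it the product term, which is exactly the upfront knowledge that is usually missing.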

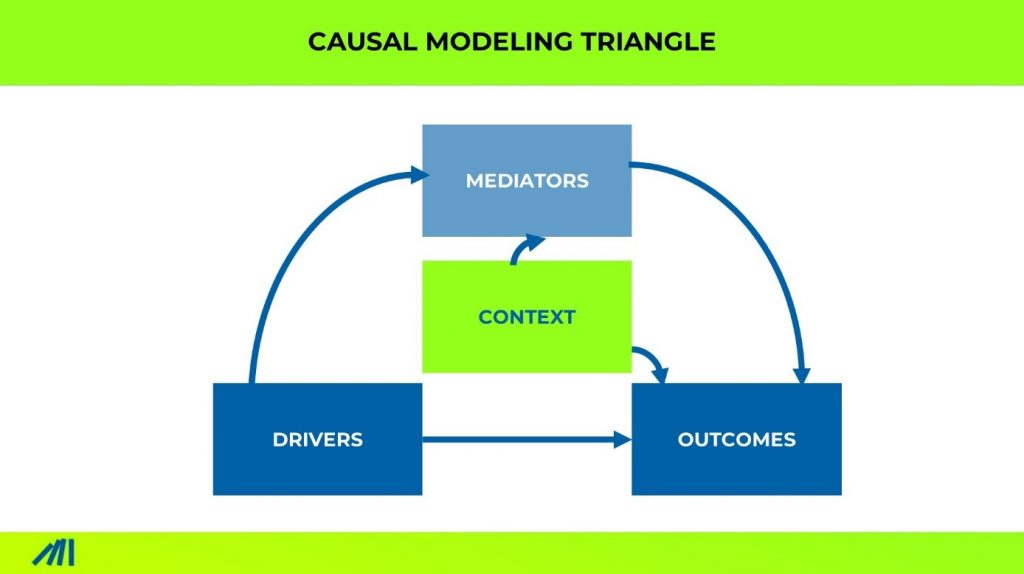

5. Causal Modeling

It turns out that the majority of business questions concern the causes of success.

When you want to drive business impact, you need to search for causal truth. Yet science, academia, statistics, and data science shy away from “causality” like a cat from water.

Because causality can never be conclusively proven, they feel safer neglecting it. They can afford to ignore it because they are not measured by business impact.

All conventional modeling shares a further fundamental flaw: the belief in input-output logic. At best, this measures only the direct causal impact, not the indirect one.

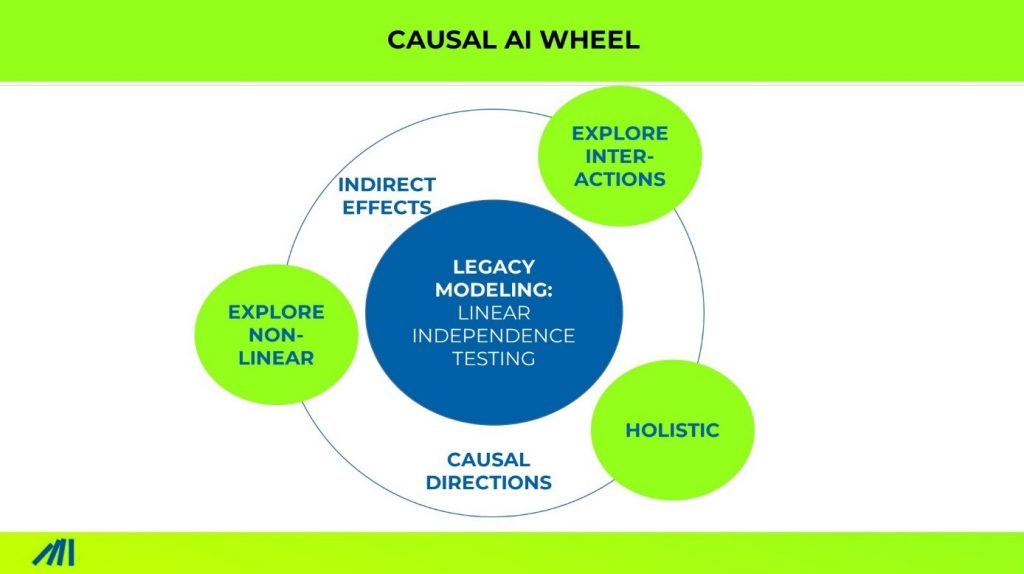

Causal modeling uses a network of effects, not just input versus output. Further, it provides methods to test the causal direction.
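The difference between direct and indirect impact can be sketched with a tiny linear network. This is a simple path-analysis illustration on simulated data, not a full causal modeling method: the structure (ads drive awareness, and both drive sales) and all coefficients are assumptions, and the sketch does not test causal direction.

```python
import random
from statistics import fmean


def centered(values):
    m = fmean(values)
    return [v - m for v in values]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def simple_slope(x, y):
    """Slope of a one-driver least-squares regression of y on x."""
    cx, cy = centered(x), centered(y)
    return dot(cx, cy) / dot(cx, cx)


def two_driver_slopes(x1, x2, y):
    """Slopes of a least-squares regression of y on x1 and x2 together."""
    c1, c2, cy = centered(x1), centered(x2), centered(y)
    s11, s22, s12 = dot(c1, c1), dot(c2, c2), dot(c1, c2)
    s1y, s2y = dot(c1, cy), dot(c2, cy)
    det = s11 * s22 - s12 * s12
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


rng = random.Random(3)
ads, awareness, sales = [], [], []
for _ in range(5_000):
    a = rng.gauss(0, 1)
    w = 0.8 * a + rng.gauss(0, 1)            # ads -> awareness
    s = 0.2 * a + 0.5 * w + rng.gauss(0, 1)  # ads -> sales, awareness -> sales
    ads.append(a)
    awareness.append(w)
    sales.append(s)

# Input-output view: all drivers side by side, one output.
direct, awareness_to_sales = two_driver_slopes(ads, awareness, sales)

# Network view: also model the path from ads to awareness.
ads_to_awareness = simple_slope(ads, awareness)
indirect = ads_to_awareness * awareness_to_sales
total = direct + indirect

print(f"direct effect of ads:   {direct:.2f}")
print(f"indirect via awareness: {indirect:.2f}")
print(f"total effect of ads:    {total:.2f}")
```

In this simulation the input-output regression credits ads with an effect of about 0.2, while the network view recovers a total effect of about 0.6, because most of the impact runs through awareness. A decision based on the direct coefficient alone would undervalue the ads.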

6. Causal AI

Causal AI combines causal modeling with machine learning. This has huge consequences for the power of the insights: it removes the limitations that conventional modeling has always had.