When I speak about causality in talks, I typically hear the objection: “Yes, but it’s impossible to be sure that those two assumptions have been met.”

Fair point. But what’s the alternative?

Guesswork?

BS storytelling?

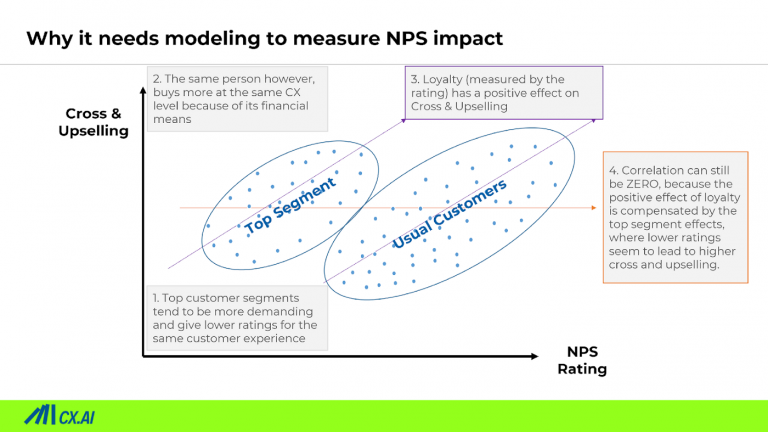

Back to Neanderthal-style spurious correlations?

This is so hard to accept: while insights about facts are obvious, insights about (cause-effect) relationships can NOT ultimately be “proven”. You need to infer them from data.

When doing so, the only thing you can do is make FEWER mistakes.

The latest Causal Machine Learning methods enable us to:

- Rely on prior theories as little as possible (although theories can still be very valuable when data is lacking)

- Reduce the risk of confounding effects by including more variables in the model (plus other analytical techniques)

- Avoid assuming the wrong causal direction by combining direction-testing methods with relevant theories about the subject at hand.
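To make the confounder point tangible, here is a minimal sketch on simulated data. Everything in it is an assumption made up for illustration: the variables `x`, `y`, `z`, the effect sizes, and the helper `ols_slopes`. Plain linear regression stands in for a real Causal Machine Learning method. In this toy world, `z` drives both `x` and `y`, while `x` has no effect on `y` at all.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000

# Hypothetical data: z is a confounder that drives both x and y.
# By construction, x has NO causal effect on y.
z = rng.normal(size=n)
x = 0.8 * z + rng.normal(size=n)
y = 1.5 * z + rng.normal(size=n)


def ols_slopes(target, *predictors):
    """Least-squares slopes of target on the predictors (intercept dropped)."""
    design = np.column_stack([np.ones(len(target)), *predictors])
    coefs, *_ = np.linalg.lstsq(design, target, rcond=None)
    return coefs[1:]


# Model 1: ignore the confounder. x appears to "drive" y.
naive_effect = ols_slopes(y, x)[0]

# Model 2: include the confounder. The apparent effect of x vanishes.
adjusted_effect = ols_slopes(y, x, z)[0]

print(f"effect of x on y, ignoring z:  {naive_effect:.2f}")
print(f"effect of x on y, including z: {adjusted_effect:.2f}")
```

The first model reports a clearly positive "effect" of `x`, and the second one shrinks it to roughly zero. Adding the variable does not prove anything, but it removes one way of being wrong.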

Leave Neanderthal times in the past, pick up the latest tools, and become a plumber of insights 😊

The good news is…

You can NOT make a mistake by just starting to improve.

The benchmark is not to arrive at the ultimate truth. That’s an impossible and impractical goal. The benchmark is to get insights that are more likely to drive results.

Causation is an endlessly important concept that everyone seems to avoid — simply because it’s not understood.

You can drive change by educating your peers, colleagues and supervisors. The first step is to share this article. 😉

“There is nothing more deceptive than an obvious fact”

Sherlock Holmes

Literature:

Buckler, F./Hennig-Thurau, T. (2008): Identifying Hidden Structures in Marketing’s Structural Models Through Universal Structure Modeling: An Explorative Neural Network Complement to LISREL and PLS, in: Marketing Journal of Research and Management, Vol. 4, pp. 47–66.

Granger, C. W. J. (1969). “Investigating Causal Relations by Econometric Models and Cross-spectral Methods”. Econometrica. 37 (3): 424–438. doi:10.2307/1912791. JSTOR 1912791.