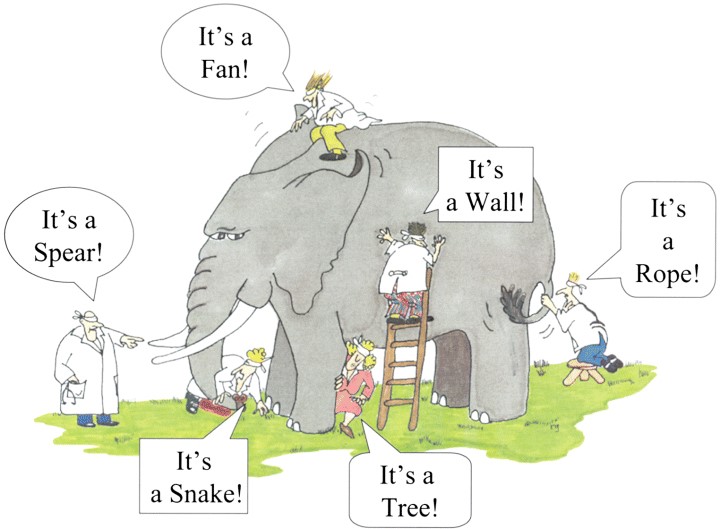

RAS impacts how you interpret research:

In 2018, Kevin Heinevetter conducted an interesting study as part of his bachelor thesis. He interviewed 30 customer insights leaders and gathered their unbiased pain points:

A majority claimed that many research studies are commissioned just to “prove” a point the business partner wants to see evidenced. If the survey finds the opposite, the study “disappears” and is never brought to the attention of others.

The same happens with studies that have an unpleasant outcome. There is a tendency to change the research methodology until the results fit the business partner’s interests.

When presenting the same insights to different audiences, you can get opposite reactions, as described in the example of my ESOMAR presentation.

If insights do not serve your interests, they are boring. If they run against your interests, you will doubt their validity.

The insight that the RAS unconsciously guides us means that all this mess is nobody’s fault, nor do managers have immoral or unethical intentions.

They have good intentions, but good intentions are not a good predictor of good outcomes. Good introspection is.

RAS impacts how you “sell” insights:

The same study by Kevin found that customer insights leaders see themselves as guardians of truth. They enjoy being a Sherlock Holmes digging for valuable insights.

As such, they have an intrinsic interest in bringing truth to practice and having business partners appreciate it.

The good news: there are clear strategies that help overcome this.

RAS also impacts how you gain insights!

The most intuitive and most respected way of gaining insights about customers is to sit down with them, ask them open questions, and actively listen to them. It’s called “qualitative research” and it feels like everyone can and should do it.

When I started my career as a management consultant, I qualitatively interviewed many target decision-makers for our clients. Over and over again, I realized that after two or three interviews, I had a clear opinion in mind, and in the following interviews, I was merely collecting validation for those beliefs.

Once you know that the interviewer is biased by their own RAS, qualitative interviewing becomes a questionable exercise. It’s not even a directionally objective collection of insights anymore. It’s a cherry-picking exercise, and this article here goes deeper into why this will produce wrong insights and beliefs.

Actually, it’s a raffle. You don’t know what you are getting.

I regularly look at findings where we categorize qualitative customer responses to a why-question and try to predict the related outcome (e.g., loyalty). Result: The verbally expressed “why” does not correlate at all with what proves to be important (as evidenced by predictive modeling).
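To make that comparison concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the eight responses are invented toy data, the category names are hypothetical, and a simple difference in loyalty rates stands in for the predictive model, since the modeling approach is not specified here. It only shows the shape of the check: how often a reason is stated versus how strongly it goes along with the outcome.

```python
from collections import Counter

# Hypothetical toy data, invented for illustration only.
# Each row: (reason categories coded from the "why" answer, loyal? 1 = yes, 0 = no)
responses = [
    ({"price"}, 1),
    ({"price"}, 0),
    ({"price", "service"}, 1),
    ({"price"}, 0),
    ({"service"}, 1),
    ({"price", "service"}, 1),
    ({"price"}, 1),
    ({"price"}, 0),
]


def mention_share(rows):
    """Share of respondents who state each category as their 'why'."""
    counts = Counter(cat for cats, _ in rows for cat in cats)
    return {cat: n / len(rows) for cat, n in counts.items()}


def loyalty_lift(rows, category):
    """Loyalty rate of those who mention the category minus the rate of those who don't.

    A crude stand-in for predictive importance, not a real model.
    Returns None if one of the two groups is empty.
    """
    with_cat = [loyal for cats, loyal in rows if category in cats]
    without_cat = [loyal for cats, loyal in rows if category not in cats]
    if not with_cat or not without_cat:
        return None
    return sum(with_cat) / len(with_cat) - sum(without_cat) / len(without_cat)


shares = mention_share(responses)
for category in sorted(shares):
    lift = loyalty_lift(responses, category)
    print(f"{category}: mentioned by {shares[category]:.0%}, loyalty lift {lift:+.2f}")
```

In this toy set, the most frequently stated reason is not the one that goes along with higher loyalty. With real data you would replace the lift calculation with a proper predictive model and many more responses.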

In short: There is a massive mismatch between what people say and what they mean. It is naive to believe it is good enough to just ask customers. (Spoiler: the alternative is not to stop asking customers!)

Your RAS takes what you focus on and creates a filter for it. It then sifts through the data and presents only the pieces that are important to you. All of this happens without you noticing.

Your focus is defined by what’s interesting to you and what your BELIEF is.